How many clinical-trial studies in medical journals are fake or fatally flawed? In October 2020, John Carlisle reported a startling estimate1.

Carlisle, an anaesthetist who works for England’s National Health Service, is renowned for his ability to spot dodgy data in medical trials. He is also an editor at the journal Anaesthesia, and in 2017, he decided to scour all the manuscripts he handled that reported a randomized controlled trial (RCT) — the gold standard of medical research. Over three years, he scrutinized more than 500 studies1.

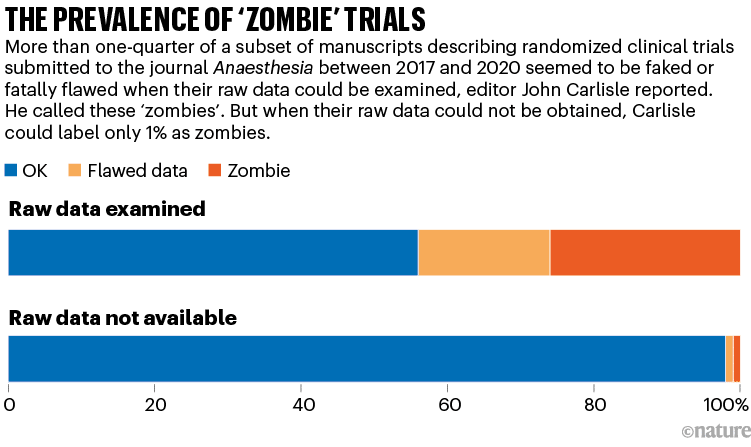

For more than 150 trials, Carlisle got access to anonymized individual participant data (IPD). By studying the IPD spreadsheets, he judged that 44% of these trials contained at least some flawed data: impossible statistics, incorrect calculations or duplicated numbers or figures, for instance. And 26% of the papers had problems that were so widespread that the trial was impossible to trust, he judged — either because the authors were incompetent, or because they had faked the data.

Carlisle called these ‘zombie’ trials because they had the semblance of real research, but closer scrutiny showed they were actually hollow shells, masquerading as reliable information. Even he was surprised by their prevalence. “I anticipated maybe one in ten,” he says.

When Carlisle couldn’t access a trial’s raw data, however, he could study only the aggregated information in the summary tables. Just 1% of these cases were zombies, and 2% had flawed data, he judged (see ‘The prevalence of ‘zombie’ trials’). This finding alarmed him, too: it suggested that, without access to the IPD — which journal editors usually don’t request and reviewers don’t see — even an experienced sleuth cannot spot hidden flaws.

Source: Ref. 1

“I think journals should assume that all submitted papers are potentially flawed and editors should review individual patient data before publishing randomised controlled trials,” Carlisle wrote in his report.

Carlisle rejected every zombie trial, but by now, almost three years later, most have been published in other journals — sometimes with different data to those submitted with the manuscript he had seen. He is writing to journal editors to alert them, but expects that little will be done.

Do Carlisle’s findings in anaesthesiology extend to other fields? For years, a number of scientists, physicians and data sleuths have argued that fake or unreliable trials are frighteningly widespread. They’ve scoured RCTs in various medical fields, such as women’s health, pain research, anaesthesiology, bone health and COVID-19, and have found dozens or hundreds of trials with seemingly statistically impossible data. Some, on the basis of their personal experiences, say that one-quarter of trials being untrustworthy might be an underestimate. “If you search for all randomized trials on a topic, about a third of the trials will be fabricated,” asserts Ian Roberts, an epidemiologist at the London School of Hygiene & Tropical Medicine.

The issue is, in part, a subset of the notorious paper-mill problem: over the past decade, journals in many fields have published tens of thousands of suspected fake papers, some of which are thought to have been produced by third-party firms, termed paper mills.

But faked or unreliable RCTs are a particularly dangerous threat. They not only are about medical interventions, but also can be laundered into respectability by being included in meta-analyses and systematic reviews, which thoroughly comb the literature to assess evidence for clinical treatments. Medical guidelines often cite such assessments, and physicians look to them when deciding how to treat patients.

Ben Mol, who specializes in obstetrics and gynaecology at Monash University in Melbourne, Australia, argues that as many as 20–30% of the RCTs included in systematic reviews in women’s health are suspect.

Many research-integrity specialists say that the problem exists, but its extent and impact are unclear. Some doubt whether the issue is as bad as the most alarming examples suggest. “We have to recognize that, in the field of high-quality evidence, we increasingly have a lot of noise. There are some good people championing that and producing really scary statistics. But there are also a lot in the academic community who think this is scaremongering,” says Žarko Alfirević, a specialist in fetal and maternal medicine at the University of Liverpool, UK.

This year, he and others are conducting more studies to assess how bad the problem is. Initial results from a study led by Alfirević are not encouraging.

Laundering fake trials

Medical research has always had fraudsters. Roberts, for instance, first came across the issue when he co-authored a 2005 systematic review for the Cochrane Collaboration, a prestigious group whose reviews of medical research evidence are often used to shape clinical practice. The review suggested that high doses of a sugary solution could reduce death after head injury. But Roberts retracted it2 after doubts arose about three of the key trials cited in the paper, all authored by the same Brazilian neurosurgeon, Julio Cruz. (Roberts never discovered whether the trials were fake, because Cruz died by suicide before investigations began. Cruz’s articles have not been retracted.)

A more recent example is that of Yoshihiro Sato, a Japanese bone-health researcher. Sato, who died in 2016, fabricated data in dozens of trials of drugs or supplements that might prevent bone fracture. He has 113 retracted papers, according to a list compiled by the website Retraction Watch. His work has had a wide impact: researchers found that 27 of Sato’s retracted RCTs had been cited by 88 systematic reviews and clinical guidelines, some of which had informed Japan’s recommended treatments for osteoporosis3.

Some of the findings in about half of these reviews would have changed had Sato’s trials been excluded, says Alison Avenell, a medical researcher at the University of Aberdeen, UK. She, along with medical researchers Andrew Grey, Mark Bolland and Greg Gamble, all at the University of Auckland in New Zealand, have pushed universities to investigate Sato’s work and monitored its influence. “It probably diverted people from being given more effective treatment for fracture prevention,” Avenell says.

Anaesthetist John Carlisle at work. Credit: Emli Bendixen

The concerns over zombie trials, however, are beyond individual fakers flying under the radar. In some fields, swathes of RCTs from different research groups might be unreliable, researchers worry.

During the pandemic, for instance, a flurry of RCTs was conducted into whether ivermectin, an anti-parasite drug, could treat COVID-19. But researchers who were not involved have since pointed out data flaws in many of the studies, some of which have been retracted. A 2022 update of a Cochrane review argued that more than 40% of these RCTs were untrustworthy4.

“Untrustworthy work must be removed from systematic reviews,” says Stephanie Weibel, a biologist at the University of Wuerzburg in Germany, who co-authored the review.

In maternal health — another field seemingly rife with problems — Roberts and Mol have flagged studies into whether a drug called tranexamic acid can stem dangerously heavy bleeding after childbirth. Every year, around 14 million people experience this condition, and some 70,000 die: it is the world’s leading cause of maternal death.

In 2016, Roberts reviewed evidence for using tranexamic acid to treat serious blood loss after childbirth. He reported that many of the 26 RCTs investigating the drug had serious flaws. Some had identical text, others had data inconsistencies or no records of ethical approval. Some seemed not to have adequately randomized the assignment of their participants to control and treatment groups5.

When he followed up with individual authors to ask for more details and raw data, he generally got no response or was told that records were missing or had been lost because of computer theft. Fortunately, in 2017, a large, high-quality multi-centre trial, which Roberts helped to run, established that the drug was effective6. It’s likely, says Roberts, that in these and other such cases, some of the dubious trials were copycat fraud — researchers saw that a large trial was going on and produced small, substandard copies that no one would question. This kind of fraud isn’t a victimless crime, however. “It results in narrowed confidence intervals such that the results look much more certain than they are. It also has the potential to amplify a wrong result, suggesting that treatments work when they don’t,” he says.
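Roberts's point about narrowed confidence intervals can be illustrated with a small inverse-variance (fixed-effect) pooling calculation. What follows is a minimal sketch with entirely hypothetical numbers, not data from any trial discussed here: one large genuine trial with a small effect, plus five small copycat trials that all report a large effect. The function name `pool_fixed_effect` and every estimate and standard error below are assumptions made for illustration.

```python
import math

def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance (fixed-effect) pooling.

    Returns (pooled estimate, lower 95% limit, upper 95% limit).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    margin = 1.96 * pooled_se  # normal approximation for a 95% interval
    return pooled, pooled - margin, pooled + margin

# Hypothetical effects on the log risk-ratio scale (negative = benefit).
genuine_estimates, genuine_ses = [-0.10], [0.10]          # one large trial
copycat_estimates, copycat_ses = [-0.60] * 5, [0.25] * 5  # five small copies

alone = pool_fixed_effect(genuine_estimates, genuine_ses)
combined = pool_fixed_effect(
    genuine_estimates + copycat_estimates,
    genuine_ses + copycat_ses,
)

width_alone = alone[2] - alone[1]
width_combined = combined[2] - combined[1]

print(f"genuine trial only:  {alone[0]:.3f} (95% CI {alone[1]:.3f} to {alone[2]:.3f})")
print(f"with copycat trials: {combined[0]:.3f} (95% CI {combined[1]:.3f} to {combined[2]:.3f})")
```

With these made-up inputs, the pooled interval is narrower than that of the genuine trial alone and no longer includes zero, even though the genuine trial by itself was inconclusive. A random-effects model would typically widen the interval, but the pull towards the fabricated effect would remain.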

That might have happened for another question: what if doctors were to inject the drug into everyone undergoing a caesarean, just after they give birth, as a preventative measure? A 2021 review7 of 36 RCTs investigating this idea, involving a total of more than 10,000 participants, concluded that this would reduce the risk of heavy blood loss by 60%.

Yet this April, an enormous US-led RCT with 11,000 people reported only a slight and not statistically significant benefit8.

Mol thinks problems with some of the 36 previous RCTs explain the discrepancy. The 2021 meta-analysis had included one multi-centre study in France of more than 4,000 participants, which found a modest 16% reduction in severe blood loss, and another 35 smaller, single-centre studies, mostly conducted in India, Iran, Egypt and China, which collectively estimated a 93% drop. Many of the smaller RCTs were untrustworthy, says Mol, who has dug into some of them in detail.

It’s unclear whether the untrustworthy studies affected clinical practice. The World Health Organization (WHO) recommends using tranexamic acid to treat blood loss after childbirth, but it doesn’t have a guideline on preventive administration.

From four trials to one

Mol points to a different example in which untrustworthy trials might have influenced clinical practice. In 2018, researchers published a Cochrane review9 on whether giving steroids to people due to undergo caesarean-section births helped to reduce breathing problems in their babies. Steroids are good for a baby’s lungs but can harm the developing brain, says Mol; benefits generally outweigh harms when babies are born prematurely, but the balance is less clear when steroids are used later in pregnancy.

The authors of the 2018 review, led by Alexandros Sotiriadis, a specialist in maternal–fetal medicine at the Aristotle University of Thessaloniki in Greece, analysed the evidence for administering steroids to people delivering by caesarean later in pregnancy. They ended up with four RCTs: a British study from 2005 with more than 940 participants, and three Egyptian trials conducted between 2015 and 2018 that added another 3,000 people into the evidence base. The review concluded that the steroids “may” reduce rates of breathing problems; it was cited in more than 200 documents and some clinical guidelines.

In January 2021, however, Mol and others, who had looked in more depth into the papers, raised concerns about the Egyptian trials. The largest study, with nearly 1,300 participants, was based on the second author’s thesis, he noted — but the trial end dates in the thesis differed from the paper. And the reported ratio of male to female babies was an impossible 40% to 60%. Mol queried the other papers, too, and wrote to the authors, but says he did not get satisfactory replies. (One author told him he’d lost the data when moving house.) Mol’s team also reported statistical issues with some other works by the same authors.
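To see why such a sex ratio looks implausible, one can compute a binomial tail probability. The sketch below makes several assumptions that the article does not state: that the trial reported on 1,300 babies (the text says only "nearly 1,300" participants), that exactly 40% of them were male, and that each baby is independently male with probability 0.5, which is a simplification. The helper name `binom_tail_le` is hypothetical.

```python
import math

def binom_tail_le(k, n, p):
    """Return P(X <= k) for X ~ Binomial(n, p), summing terms in log space."""
    log_terms = [
        math.lgamma(n + 1) - math.lgamma(i + 1) - math.lgamma(n - i + 1)
        + i * math.log(p) + (n - i) * math.log(1.0 - p)
        for i in range(k + 1)
    ]
    largest = max(log_terms)
    # Factor out the largest term so the remaining exponentials stay well scaled.
    return math.exp(largest) * sum(math.exp(t - largest) for t in log_terms)

n_babies = 1300                 # assumption: rounded from "nearly 1,300"
n_males = n_babies * 40 // 100  # assumption: exactly 40% male
p_male = 0.5                    # assumption: simplified chance of a male birth

prob = binom_tail_le(n_males, n_babies, p_male)
print(f"P(at most {n_males} males out of {n_babies}) = {prob:.2e}")
```

Under these assumptions the probability comes out far below one in a billion, which is consistent with Mol's description of the ratio as impossible. Using a slightly higher probability of a male birth, as is typically observed, would make the result smaller still.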

In December 2021, Sotiriadis’s team updated its review10. But this time, it adopted a new screening protocol. Until that year, Cochrane reviews had aimed to include all relevant RCTs; if researchers spotted potential issues with a trial, using a ‘risk of bias’ checklist, they would downgrade their confidence in its findings, but not remove it from their analysis. But in 2021, Cochrane’s research-integrity team introduced new guidance: authors should try to identify ‘problematic’ or ‘untrustworthy’ trials and exclude them from reviews. Sotiriadis’s group now excluded all but the British research. With only one trial left, there was “insufficient data” to draw firm conclusions about the steroids, the researchers said.

By last May, as Retraction Watch reported, the large Egyptian trial was retracted (to the disagreement of its authors). The journal’s editors wrote in the retraction notice that they had not received its data or a satisfactory response from the authors, adding that “if the data is unreliable, women and babies are being harmed”. The other two trials are still under investigation by publisher Taylor & Francis as part of a larger case of papers, says Sabina Alam, director of publishing ethics at the firm. Before the 2018 review, some clinical guidelines had suggested that administering steroids later in pregnancy could be beneficial, and the practice had been growing in some countries, such as Australia, Mol has reported. The latest updated WHO and regional guidelines, however, recommend against this practice.

Overall, Mol and his colleagues have alleged problems in more than 800 published medical research papers, at least 500 of which are on RCTs. So far, the work has led to more than 80 retractions and 50 expressions of concern. Mol has focused much of his work on papers from countries in the Middle East, and particularly in Egypt. One researcher responded to some of his e-mails by accusing him of racism. Mol, however, says that it’s simply a fact that he has encountered many suspect statistics and refusals to share data from RCT authors in countries such as Iran, Egypt, Turkey and China — and that he should be able to point that out.

Screening for trustworthiness

“Ben Mol has undoubtedly been a pioneer in the field of detecting and fighting data falsification,” says Sotiriadis — but he adds that it is difficult to prove that a paper is falsified. Sotiriadis says he didn’t depend on Mol’s work when his team excluded those trials in its update, and he can’t say whether the trials were corrupt.

Instead, his group followed a screening protocol designed to check for ‘trustworthiness’. It had been developed by one of Cochrane’s independent specialist groups, the Cochrane Pregnancy and Childbirth (CPC) group, coordinated by Alfirević. (This April, Cochrane formally dissolved this group and some others, as part of a reorganization strategy.) It provides a detailed list of criteria that authors should follow to check the trustworthiness of an RCT — such as whether a trial is prospectively registered and whether the study is free of unusual statistics, such as implausibly narrow or wide distributions of mean values in participant height, weight or other characteristics, and other red flags. If RCTs fail the checks, then reviewers are instructed to contact the original study authors — and, if the replies are not adequate, to exclude the study.

“We’re championing the idea that, if a study doesn’t pass these bars, then no hard feelings, but we don’t call it trustworthy enough,” Alfirević explains.

For Sotiriadis, the merit of this protocol was that it avoided his having to declare the trials faulty or fraudulent; they had merely failed a test of trustworthiness. His team ultimately reported that it excluded the Egyptian trials because they hadn’t been prospectively registered and the authors didn’t explain why.

Other Cochrane authors are starting to adopt the same protocol. For instance, a review11 of drugs aiming to prevent pre-term labour, published last August, used it to exclude 44 studies — one-quarter of the 122 trials in the literature.

What counts as trustworthy?

Whether trustworthiness checks are sometimes unfair to the authors of RCTs, and exactly what should be checked to classify untrustworthy research, is still up for debate. In a 2021 editorial12 introducing the idea of trustworthiness screening, Lisa Bero, a senior research integrity editor at Cochrane, and a bioethicist at the University of Colorado Anschutz Medical Campus in Aurora, pointed out that there was no validated, universally agreed method.

“Misclassification of a genuine study as problematic could result in erroneous review conclusions. Misclassification could also lead to reputational damage to authors, legal consequences, and ethical issues associated with participants having taken part in research, only for it to be discounted,” she and two other researchers wrote.

For now, there are multiple trustworthiness protocols in play. In 2020, for instance, Avenell and others published REAPPRAISED, a checklist aimed more at journal editors. And when Weibel and others reviewed trials investigating ivermectin as a COVID-19 treatment last year, they created their own checklist, which they call a ‘research integrity assessment’.

Bero says some of these checks are more labour-intensive than editors and systematic reviewers are generally accustomed to. “We need to convince systematic reviewers that this is worth their time,” she says. She and others have consulted biomedical researchers, publishers and research-integrity experts to come up with a set of red flags that might serve as the basis for creating a widely agreed method of assessment.

Despite the concerns of researchers such as Mol, many scientists remain unsure how many reviews have been compromised by unreliable RCTs. This year, a team led by Jack Wilkinson, a health researcher at the University of Manchester, UK, is using the results of Bero’s consultation to apply a list of 76 trustworthiness checks to all trials cited in 50 published Cochrane reviews. (The 76 items include detailed examination of the data and statistics in trials, as well as inspecting details on funding, grants, trial registration, the plausibility of study methods and authors’ publication records — but, in this exercise, data from individual participants are not being requested.)

The aim is to see how many RCTs fail the checks, and what impact removing those trials would have on the reviews’ conclusions. Wilkinson says a team of 50 is working on the project. He aims to produce a general trustworthiness-screening tool, as well as a separate tool to aid in inspecting participant data, if authors provide them. He will discuss the work in September at Cochrane’s annual colloquium.

Alfirević’s team, meanwhile, has found in a study yet to be published that 25% of around 350 RCTs in 18 Cochrane reviews on nutrition and pregnancy would have failed trustworthiness checks, using the CPC’s method. With these RCTs excluded, the team found that one-third of the reviews would require updating because their findings would have changed. The researchers will report more details in September.

In Alfirević’s view, it doesn’t particularly matter which trustworthiness checks reviewers use, as long as they do something to scrutinize RCTs more closely. He warns that the numbers of systematic reviews and meta-analyses that journals publish have themselves been soaring in the past decade — and many of these reviews can’t be trusted because of shoddy screening methods. “An untrustworthy systematic review is far more dangerous than an untrustworthy primary study,” he says. “It is an industry that is completely out of hand, with little quality assurance.”

Roberts, who first published in 2015 his concerns over problematic medical research in systematic reviews13, says that the Cochrane organization took six years to respond and still isn’t taking the issue seriously enough. “If up to 25% of trials included in systematic reviews are fraudulent, then the whole Cochrane endeavour is suspect. Much of what we think we know based on systematic reviews is wrong,” he says.

Bero says that Cochrane consulted widely to develop its 2021 guide on addressing problematic trials, including incorporating suggestions from Roberts, other Cochrane reviewers and research-integrity experts.

Asking for data

Many researchers worried by medical fakery agree with Carlisle that it would help if journals routinely asked authors to share their IPD. “Asking for raw data would be a good policy. The default position has just been to trust the study, but we’ve been operating from quite a naive position,” says Wilkinson. That advice, however, runs counter to current practice at most medical journals.

In 2016, the International Committee of Medical Journal Editors (ICMJE), an influential body that sets policy for many major medical titles, had proposed requiring mandatory data-sharing from RCTs. But it got pushback — including over perceived risks to the privacy of trial participants who might not have consented to their data being shared, and the availability of resources for archiving the data. As a result, in the latest update to its guidance, in 2017, it settled for merely encouraging data sharing and requiring statements about whether and where data would be shared.

The ICMJE secretary, Christina Wee, says that “there are major feasibility challenges” to be resolved to mandate IPD sharing, although the committee might revisit its practices in future. Many publishers of medical journals told Nature’s news team that, following ICMJE advice, they didn’t require IPD from authors of trials. (These publishers included Springer Nature; Nature’s news team is editorially independent.)

Some journals, however — including Carlisle’s Anaesthesia — have gone further and do already require IPD. “Most authors provide the data when told it is a requirement,” Carlisle says.

Even when IPD are shared, says Wilkinson, scouring them in the way that Carlisle does is a time-consuming exercise that creates a further burden for reviewers, although computational checks of statistics might help.

Besides asking for data, journal editors could also speed up their decision-making, research-integrity specialists say. When sleuths raise concerns, editors should be prepared to put expressions of concern on medical studies more quickly if they don’t hear back from authors, Avenell says. This April, a UK parliamentary report into reproducibility and research integrity said that it should not take longer than two months for publishers to publish corrections or retractions of research when academics raise issues.

And if journals do retract studies, authors of systematic reviews should be required to correct their work, Avenell and others say. This rarely happens. Last year, for instance, Avenell’s team reported that it had carefully and repeatedly e-mailed authors and journal editors of the 88 reviews that cited Sato’s retracted trials to inform them that their reviews included retracted work. They got few responses — only 11 of the 88 reviews have been updated so far — suggesting that authors and editors didn’t generally care about correcting the reviews3.

That was dispiriting but not surprising to the team, which has previously recounted how institutional investigations into Sato’s work were opaque and inadequate. The Cochrane collaboration, for its part, stated in updated guidance in 2021 that systematic reviews must be updated when retractions occur.

Ultimately, a lingering question is — as with paper mills — why so many suspect RCTs are being produced in the first place. Mol, from his experiences investigating the Egyptian studies, blames lack of oversight and superficial assessments that promote academics on the basis of their number of publications, as well as the lack of stringent checks from institutions and journals on bad practices. Egyptian authorities have taken some steps to improve governance of trials, however; Egypt’s parliament, for instance, published its first clinical research law in December 2020.

“The solution’s got to be fixes at the source,” says Carlisle. “When this stuff is churned out, it’s like fighting a wildfire and failing.”

Highly Recommended: Retraction Watch

There is a worrying amount of fraud in medical research

And a worrying unwillingness to do anything about it

In 2011 Ben Mol, a professor of obstetrics and gynaecology at Monash University, in Melbourne, came across a retraction notice for a study on uterine fibroids and infertility published by a researcher in Egypt. The journal which had published it was retracting it because it contained identical numbers to those in an earlier Spanish study, except that that one had been on uterine polyps. The author, it turned out, had simply copied parts of the polyp paper and changed the disease.

“From that moment I was alert,” says Dr Mol. And his alertness was not merely as a reader of published papers. He was also, at the time, an editor of the European Journal of Obstetrics and Gynaecology, and frequently also a peer reviewer for papers submitted to other journals. Sure enough, two papers containing apparently fabricated data soon landed on his desk. He rejected them. But, a year later, he came across them again, except with the fishy data changed, published in another journal.

Tenfold increase in scientific research papers retracted for fraud

The proportion of scientific research that is retracted due to fraud has increased tenfold since 1975, according to the most comprehensive analysis yet of how research papers go wrong.

The study, published on Monday in the Proceedings of the National Academy of Sciences (PNAS), found that more than two-thirds of retractions of biomedical and life sciences papers from the scientific record are due to misconduct by researchers, rather than error.

The results add weight to recent concerns that scientific misconduct is on the rise and that fraud increasingly affects fields that underpin many areas of public concern, such as medicine and healthcare.

The authors said their findings could only be a conservative estimate of the true scale of scientific misconduct.

"The better the counterfeit, the less likely you are to find it – whatever we show, it is an underestimate," said Arturo Casadevall, professor of microbiology, immunology and medicine at the Albert Einstein College of Medicine in New York and an author on the study.

Casadevall and his colleagues examined 2,047 papers on the PubMed database that had been retracted from the biomedical literature through to May 2012.

The authors consulted secondary sources such as the US Office of Research Integrity and the Retraction Watch blog, which highlights cases of scientific misconduct, to work out the reasons for each of the retractions.

Their results found that 67.4% of retractions were attributable to scientific misconduct and only 21.3% were down to error. The misconduct percentage was composed of fraud or suspected fraud (43.3%), duplicated publications (14.2%) and plagiarism (9.8%).

In addition, the long-term trend for misconduct was on the up: in 1976 there were only three retractions for misconduct out of 309,800 papers (0.00097%) whereas there were 83 retractions for misconduct out of 867,700 papers at a recent peak in 2007 (0.0096%).
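The percentages quoted above follow directly from the counts, and the ratio between them is where the "tenfold" figure comes from. A quick check, using only the numbers given in the text (the function name `retraction_rate_percent` is just illustrative):

```python
def retraction_rate_percent(retracted, published):
    """Share of published papers retracted for misconduct, as a percentage."""
    return 100.0 * retracted / published

rate_1976 = retraction_rate_percent(3, 309_800)
rate_2007 = retraction_rate_percent(83, 867_700)
fold_increase = rate_2007 / rate_1976

print(f"1976: {rate_1976:.5f}%")
print(f"2007: {rate_2007:.4f}%")
print(f"increase: about {fold_increase:.1f}-fold")
```

The ratio comes out slightly under ten, so "tenfold" is a rounded description of the change between these two years.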

Among recent and well-publicised cases of scientific fraud is that of the South Korean stem cell scientist Hwang Woo-suk, who was dismissed from his post at Seoul National University in 2006 after fabricating research on stem cells.

Ferric Fang, a professor at the University of Washington school of medicine and co-author of the study, said the prevailing assumption that most retractions were down to honest error by researchers had been maintained for years because retraction notices in scientific journals were often opaque or misleading.

"Some give no information and others give the appearance of error but when you look at the more detailed report of what happened, it's clear that it's fraud," he said.

Ivan Oransky, editor of the Retraction Watch blog, praised the analysis carried out by Casadevall, Fang and their colleague Grant Steen. "It's now clear that the reason misconduct seemed to play a smaller role in retractions, according to previous studies, is that so many notices said nothing about why a paper was retracted.

"If scientific journals are as interested in correcting the literature as they'd like us to think they are, and want us to believe they're transparent, the ones that fail to include that information need to take a lesson from those that do."

The authors of the study said the higher rate of retraction in high-impact journals could be due to the increased scrutiny placed on the research in these journals and the greater uncertainty associated with the most cutting-edge research. "Alternatively, the disproportionately high payoffs to scientists for publication in prestigious venues can be an incentive to perform work with excessive haste or to engage in unethical practices," they wrote in PNAS.

James Parry, director of the UK Research Integrity Office, said that the principles of good research practice, such as being honest and objective, are often thought to be obvious. "Studies like this show that good research practice should be self-evident but often isn't."

Chris Chambers, a psychologist at the University of Cardiff, said he found the results disturbing but not surprising. "They confirm that misconduct is increasing and is the most common reason for retraction, particularly in high impact journals. Above all this shows us how broken the incentive structure of science has become."

Casadevall said the pressures to commit fraud came from many sources - not least the competition for scarce funding for research. In addition, the disproportionate rewards given to scientists who publish in high-impact journals mean that some may succumb to the temptation of making their results seem more impressive than they actually are.

A first step to working out the true scale of the wider problem, though, would be to make journal retraction notices more transparent. "We're hoping the scientific publishing business sits down and tries to identify some standards," said Casadevall. "Right now the retraction notices are written by the authors and that probably makes sense because they know what the problem was. But we are learning that, in cases of fraud, there's a desire to make the issue confusing, that is not to admit it."

Fang said that, despite highlighting problems, it would be wrong to take the message away from the study that science is somehow untrustworthy as a whole. "People who are opposed to science for their own reasons cannot take any solace in our findings as supporting them. You could look at this the opposite way and say that we find that a very few papers end up retracted. This is a very strong endorsement of the overall quality of science. That said, we would like the fraction to be even smaller."

Chambers said that studies such as this would be vital in making science more robust. "They turn scientific methods back on science itself. Self-scrutiny stops us from fooling ourselves and shows where we need to improve our culture and practices. I'd even go so far as to say that studies like this are more important than much of the science that gets reported."

Erik Boetto, Davide Golinelli (http://orcid.org/0000-0001-7331-9520), Gherardo Carullo, Maria Pia Fantini

Correspondence to Dr Davide Golinelli, Department of Biomedical and Neuromotor Sciences, University of Bologna, Bologna 40126, Italy; davide.golinelli@unibo.it

Abstract

Fraud and misconduct have been common in the history of science. Recent events connected to the COVID-19 pandemic have highlighted how the risks and consequences of this are no longer acceptable. Two papers addressing the treatment of COVID-19 were published in two of the most prestigious medical journals; the authors stated that they had analysed electronic health records from a private corporation, which apparently collected data on tens of thousands of patients from hundreds of hospitals. Both papers were retracted a few weeks later. When such events happen, the confidence of the population in scientific research is likely to be weakened. This paper highlights how the current system endangers the reliability of scientific research, and the very foundations of the trust system on which modern healthcare is based. Having shed light on the dangers of a system without appropriate monitoring, the proposed analysis suggests strengthening existing journal policies and improving the research process using new technologies that support control activities by public authorities. Among these solutions, we mention blockchain technology, which seems a promising way to avoid repeating the mistakes of the recent and past history of research.

Fraud in science: a plea for a new culture in research

European Journal of Clinical Nutrition 68, 411–415 (2014)

Fraud and Deceit in Medical Research: Insights and Current Perspectives

The characteristics of scientific fraud and its impact on medical research are in general not well known. However, the interest in the phenomenon has increased steadily during the last decade. Biostatisticians routinely work closely with physicians and scientists in many branches of medical research and have therefore unique insight into data. In addition, they have methodological competence to detect fraud and could be expected to have a professional interest in valid results. Biostatisticians therefore are likely to provide reliable information on the characteristics of fraud in medical research. The objective of this survey of biostatisticians, who were members of the International Society for Clinical Biostatistics, was to assess the characteristics of fraud in medical research. The survey was performed between April and July 1998. The participation rate was only 37%. We report the results because a majority (51%) of the participants knew about fraudulent projects, and many did not know whether the organization they work for has a formal system for handling suspected fraud or not. Different forms of fraud (e.g., fabrication and falsification of data, deceptive reporting of results, suppression of data, and deceptive design or analysis) had been observed in fairly similar numbers. We conclude that fraud is not a negligible phenomenon in medical research, and that increased awareness of the forms in which it is expressed seems appropriate. Further research, however, is needed to assess the prevalence of different types of fraud, as well as its impact on the validity of results published in the medical literature. Control Clin Trials 2000;21:415–427

Fake scientific papers are alarmingly common

But new tools show promise in tackling growing symptom of academia’s “publish or perish” culture

Research Fraud: When Science Goes Bad

China’s fake science industry: how ‘paper mills’ threaten progress

Abstract

A flurry of discussions about plagiarism and predatory publications in recent times has brought the issue of scientific misconduct in India to the fore. The debate has framed scientific misconduct in India as a recent phenomenon. This article questions that framing, which rests on the current tendency to define and police scientific misconduct as a matter of individual behavior. Without ignoring the role of individuals, this article contextualizes their actions by calling attention to the conduct of the institutions, as well as social and political structures that are historically responsible for governing the practice of science in India since the colonial period. Scientific (mis)conduct, in other words, is here examined as a historical phenomenon borne of the interaction between individuals’ aspirations and the systems that impose, measure, and reward scientific output in particular ways. Importantly, historicizing scientific misconduct in this way also underscores scientist-driven initiatives and regulatory interventions that have placed India at the leading edge of reform. With the formal establishment of the Society for Scientific Values in 1986, Indian scientists became the first national community worldwide to monitor research integrity in an institutionally organized way.

Russian journals retract more than 800 papers after ‘bombshell’ investigation

Academy commission's probe of domestic journals causes "conflict and tension"

- All human activity is associated with misconduct, and as scientific research is a global activity, research misconduct is a global problem.

- Studies conducted mostly in high-income countries suggest that 2%–14% of scientists may have fabricated or falsified data and that a third to three-quarters may be guilty of “questionable research practices.”

- The few data available from low- and middle-income countries (LMICs) suggest that research misconduct is as common there as in high-income countries, and there have been high profile cases of misconduct from LMICs.

- A comprehensive response to misconduct should include programmes of prevention, investigation, punishment, and correction, and arguably no country has a comprehensive response, although the US, the Scandinavian countries, and Germany have formal programmes.

- China has created an Office of Scientific Research Integrity Construction and begun a comprehensive response to research misconduct, but most LMICs have yet to mount a response.

Fraud in science is alarmingly common. Sometimes researchers lie about results and invent data to win funding and prestige. Other times, researchers might pay to stage and publish entirely bogus studies to win an undeserved pay rise – fuelling a “paper mill” industry worth an estimated €1 billion a year.

Some of this rubbish can be easily spotted by peer reviewers, but the peer review system has become badly stretched by ever-rising paper numbers. And there’s a new threat, as more sophisticated AI is able to generate plausible scientific data.

The latest idea among academic publishers is to use automated tools to screen all papers submitted to scientific journals for telltale signs. However, some of these tools are easy to fool.

I am part of a group of multidisciplinary scientists working to tackle research fraud and poor practice using metascience or the “science of science”. Ours is a new field, but we already have our own society and our members have worked with funders and publishers to investigate improvements to research practice.

Europe must address research misconduct

Health research is based on trust. Health professionals and journal editors reading the results of a clinical trial assume that the trial happened and that the results were honestly reported. But about 20% of the time, said Ben Mol, professor of obstetrics and gynaecology at Monash Health, they would be wrong. As I’ve been concerned about research fraud for 40 years, I wasn’t as surprised as many would be by this figure, but it led me to think that the time may have come to stop assuming that research actually happened and is honestly reported, and assume that the research is fraudulent until there is some evidence to support it having happened and been honestly reported. The Cochrane Collaboration, which purveys “trusted information,” has now taken a step in that direction.

As he described in a webinar last week, Ian Roberts, professor of epidemiology at the London School of Hygiene & Tropical Medicine, began to have doubts about the honest reporting of trials after a colleague asked if he knew that his systematic review showing that mannitol halved death from head injury was based on trials that had never happened. He didn’t, but he set about investigating the trials and confirmed that they hadn’t ever happened. They all had a lead author who purported to come from an institution that didn’t exist and who killed himself a few years later. The trials were all published in prestigious neurosurgery journals and had multiple co-authors. None of the co-authors had contributed patients to the trials, and some didn’t know that they were co-authors until after the trials were published. When Roberts contacted one of the journals the editor responded that “I wouldn’t trust the data.” Why, Roberts wondered, did he publish the trial? None of the trials have been retracted.

Later Roberts, who headed one of the Cochrane groups, did a systematic review of colloids versus crystalloids only to discover again that many of the trials that were included in the review could not be trusted. He is now sceptical about all systematic reviews, particularly those that are mostly reviews of multiple small trials. He described the original idea of systematic reviews as searching for diamonds, knowledge that was available if brought together in systematic reviews; now he thinks of systematic reviewing as searching through rubbish. He proposed that small, single centre trials should be discarded, not combined in systematic reviews.

Mol, like Roberts, has conducted systematic reviews only to realise that most of the trials included either were zombie trials that were fatally flawed or were untrustworthy. What, he asked, is the scale of the problem? Although retractions are increasing, only about 0.04% of biomedical studies have been retracted, suggesting the problem is small. But the anaesthetist John Carlisle analysed 526 trials submitted to Anaesthesia and found that 73 (14%) had false data, and 43 (8%) he categorised as zombie. When he was able to examine individual patient data in 153 studies, 67 (44%) had untrustworthy data and 40 (26%) were zombie trials. Many of the trials came from the same countries (Egypt, China, India, Iran, Japan, South Korea, and Turkey), and when John Ioannidis, a professor at Stanford University, examined individual patient data from trials submitted from those countries to Anaesthesia during a year he found that many were false: 100% (7/7) in Egypt; 75% (3/4) in Iran; 54% (7/13) in India; 46% (22/48) in China; 40% (2/5) in Turkey; 25% (5/20) in South Korea; and 18% (2/11) in Japan. Most of the trials were zombies. Ioannidis concluded that there are hundreds of thousands of zombie trials published from those countries alone.
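The percentages quoted above can be rechecked from the raw counts. The following is a minimal sketch in Python, not anything used by Carlisle or Ioannidis: the dictionary labels and the helper names `percent` and `summarize` are made up for illustration, and the only data are the counts given in the paragraph.

```python
# Counts as quoted in the paragraph above: (flagged trials, trials examined).
# The labels and helper names are illustrative assumptions, not from the source.
carlisle = {
    "all trials, false data": (73, 526),
    "all trials, zombie": (43, 526),
    "IPD examined, untrustworthy data": (67, 153),
    "IPD examined, zombie": (40, 153),
}

by_country = {
    "Egypt": (7, 7),
    "Iran": (3, 4),
    "India": (7, 13),
    "China": (22, 48),
    "Turkey": (2, 5),
    "South Korea": (5, 20),
    "Japan": (2, 11),
}


def percent(flagged: int, total: int) -> int:
    """Return the share of flagged trials as a whole-number percentage."""
    return round(100 * flagged / total)


def summarize(counts: dict) -> dict:
    """Map each label to its rounded percentage."""
    return {label: percent(flagged, total) for label, (flagged, total) in counts.items()}


carlisle_pct = summarize(carlisle)
country_pct = summarize(by_country)

for label, (flagged, total) in {**carlisle, **by_country}.items():
    print(f"{label}: {flagged}/{total} = {percent(flagged, total)}%")
```

Several of the country denominators are very small (4, 5 and 7 trials), so those percentages are best read as rough indications rather than precise rates.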

Others have found similar results, and Mol’s best guess is that about 20% of trials are false. Very few of these papers are retracted.

We have long known that peer review is ineffective at detecting fraud, especially if the reviewers start, as most have until now, by assuming that the research is honestly reported. I remember being part of a panel in the 1990s investigating one of Britain’s most outrageous cases of fraud, when the statistical reviewer of the study told us that he had found multiple problems with the study and only hoped that it was better done than it was reported. We asked if he had ever considered that the study might be fraudulent, and he told us that he hadn’t.

We have now reached a point where those doing systematic reviews must start by assuming that a study is fraudulent until they can have some evidence to the contrary. Some supporting evidence comes from the trial having been registered and having ethics committee approval. Andrew Grey, an associate professor of medicine at the University of Auckland, and others have developed a checklist with around 40 items that can be used as a screening tool for fraud. The REAPPRAISED checklist (Research governance, Ethics, Authorship, Plagiarism, Research conduct, Analyses and methods, Image manipulation, Statistics, Errors, Data manipulation and reporting) covers issues like “ethical oversight and funding, research productivity and investigator workload, validity of randomisation, plausibility of results and duplicate data reporting.” The checklist has been used to detect studies that have subsequently been retracted but hasn’t been through the full evaluation that you would expect for a clinical screening tool. (But I must congratulate the authors on a clever acronym: some say that dreaming up the acronym for a study is the most difficult part of the whole process.)

Roberts and others wrote about the problem of the many untrustworthy and zombie trials in The BMJ six years ago with the provocative title: “The knowledge system underpinning healthcare is not fit for purpose and must change.” They wanted the Cochrane Collaboration and anybody conducting systematic reviews to take very seriously the problem of fraud. It was perhaps coincidence, but a few weeks before the webinar the Cochrane Collaboration produced guidelines on reviewing studies where there has been a retraction, an expression of concern, or the reviewers are worried about the trustworthiness of the data.

Retractions are the easiest to deal with, but they are, as Mol said, only a tiny fraction of untrustworthy or zombie studies. An editorial in the Cochrane Library accompanying the new guidelines recognises that there is no agreement on what constitutes an untrustworthy study, screening tools are not reliable, and “Misclassification could also lead to reputational damage to authors, legal consequences, and ethical issues associated with participants having taken part in research, only for it to be discounted.” The Collaboration is being cautious but does stand to lose credibility—and income—if the world ceases to trust Cochrane Reviews because they are thought to be based on untrustworthy trials.

Research fraud is often viewed as a problem of “bad apples,” but Barbara K Redman, who spoke at the webinar, insists that it is not a problem of bad apples but of bad barrels, if not, she said, of rotten forests or orchards. In her book Research Misconduct Policy in Biomedicine: Beyond the Bad-Apple Approach she argues that research misconduct is a systems problem—the system provides incentives to publish fraudulent research and does not have adequate regulatory processes. Researchers progress by publishing research, and because the publication system is built on trust and peer review is not designed to detect fraud it is easy to publish fraudulent research. The business model of journals and publishers depends on publishing, preferably lots of studies as cheaply as possible. They have little incentive to check for fraud and a positive disincentive to experience reputational damage—and possibly legal risk—from retracting studies. Funders, universities, and other research institutions similarly have incentives to fund and publish studies and disincentives to make a fuss about fraudulent research they may have funded or had undertaken in their institution—perhaps by one of their star researchers. Regulators often lack the legal standing and the resources to respond to what is clearly extensive fraud, recognising that proving a study to be fraudulent (as opposed to suspecting it of being fraudulent) is a skilled, complex, and time-consuming process. Another problem is that research is increasingly international with participants from many institutions in many countries: who then takes on the unenviable task of investigating fraud? Science really needs global governance.

Everybody gains from the publication game, concluded Roberts, apart from the patients who suffer from being given treatments based on fraudulent data.

Stephen Lock, my predecessor as editor of The BMJ, became worried about research fraud in the 1980s, but people thought his concerns eccentric. Research authorities insisted that fraud was rare, didn’t matter because science was self-correcting, and that no patients had suffered because of scientific fraud. All those reasons for not taking research fraud seriously have proved to be false, and, 40 years on from Lock’s concerns, we are realising that the problem is huge, the system encourages fraud, and we have no adequate way to respond. It may be time to move from assuming that research has been honestly conducted and reported to assuming it to be untrustworthy until there is some evidence to the contrary.

Richard Smith was the editor of The BMJ until 2004.

The Non-Integrated Self

Lessons from narcissists and sociopaths.

- Our internal landscape can dictate our behavior, often without us consciously realizing it.

- People, including sociopaths and narcissists, lead secret lives that contradict their openly stated beliefs.

- Developing an "Adult Mind" can guide us toward consistent behavior with our true values and aspirations.

The typical sociopath gets caught in an affair, a financial scandal, or just a lie. Their response? How do I wiggle my way out of this? A pragmatic, amoral decision.

The typical narcissist gets caught in an affair, financial scandal, or just a lie. Their response? Attack the person that exposed them. Make that other person suffer.

A person [with integrity] gets caught in an affair, financial scandal, or just a lie. Their response? Guilt and shame; a wish to make amends. The need to do something because it feels terrible.

What can we learn here?

The Interior Landscape

We are far less integrated than we would like to think.

What I mean by this is that our notion of self is somewhat of an illusion, although with practical value. It is useful to believe that you are in charge of yourself when the truth is less reassuring.

We think we are in charge. We think we know what we’re doing.

Unless we do a lot of internal work, we tend to operate on the surface, often under the influence of internal factors; what I like to call our "internal landscape".

Yes, internal landscape. Consider the mind like a huge swath of territory. The self we value so much only has a partial view of it. We may choose not to see the whole territory, or some may be blocked off to us, but that territory within is still there. In this regard.

Examples of places in an internal landscape:

- A little boy within who was hurt.

- A little girl inside who feels displaced in her family.

- A traumatized place within that goes into flight/fight/freeze when activated.

- A powerful sense of self that “believes” it’s in charge.

- A powerful sense of helplessness that “believes” things never work out.

There are endless spots on the landscape. All within. Some remembered. Some forgotten. Ancient archaeology, modern history. All there.

In good psychotherapy, these places get exposed. We make friends with them. They’re not going away, but they can be rearranged and minimized the more we understand them and navigate around them.

Mouse Eye/Eagle Eye

Consider an ancient piece of wisdom exemplified by the term "mouse eye". Seeing things close up, they appear huge and overwhelming. Mouse eye sees without perspective and with overwhelming immediacy.

We live our lives very much in mouse eye.

Then consider another idea: "eagle eye". Seeing things from up above, the big picture. Eagles have precision sight to accompany their advantage in height. To see with eagle eye often takes psychotherapy or intense self-reflection.

We all lead secret lives, often contradicting our own stated beliefs and values. We break diets while pretending to adhere, engage in gossip we condemn, feign knowledge, and seek attention while denying vanity. Greed subtly influences us, and addictions may lure us.

This clandestine existence thrives as we ignore our internal conflicts.

Unresolved traumas can also manifest in overreactions—an aspect of our behavior we often overlook or dismiss.

What Narcissists and Sociopaths Teach Us

Individuals with narcissistic or sociopathic traits often lead secret lives. Narcissists, with their brittle egos and sense of entitlement, use others for personal success. Similarly, sociopaths can contribute to their communities while exploiting them. Their ego is more robust, but they lack a sense of responsibility, focusing solely on winning without guilt or shame.

When a narcissist's secret life is exposed, they can't reconcile guilt internally due to their brittle ego. They are intolerant to criticism, causing them to react viciously when exposed.

Sociopaths are aware of their actions and indifferent to guilt or shame.

They view people as pawns to achieve victory and can examine their internal landscape, rationalize their behavior, or simply not care. They can display narcissistic grandiosity if it serves their agenda. Their lack of guilt and focus on winning make them challenging to treat.

They Came Before The Bible: The Absence of Evidence is the Evidence of Plagiarism

This book compares the fictionalized characters in the Bible with people in other cultures who actually existed and/or had their stories taken from them and inserted into the Hebrew Bible. It ranges from the writing of Moses in the first five books, which include Adam and Eve and the Serpent, to the mythological story of Jesus, and traces where these stories originated, unveiling ancient stories that predate the Bible itself.