With little knowledge comes great confidence: Study reveals relationship between knowledge and attitudes toward science

Overconfidence has long been recognized as a critical problem in judgment and decision making.

According to Dr. Cristina Mendonça, one of the lead authors of a new study published in Nature Human Behaviour, "Overconfidence occurs when individuals subjectively assess their aptitude to be higher than their objective accuracy [and] has long been recognized as a critical problem in judgment and decision making. Past research has shown that miscalibrations in the internal representation of accuracy can have severe consequences but how to gauge these miscalibrations is far from trivial."

In the case of scientific knowledge, overconfidence might be particularly significant, as the lack of awareness of one's own ignorance can impact behaviors, pose risks to public policies, and even jeopardize health.

In the study published today, researchers examined four large surveys conducted over a span of 30 years in Europe and the U.S., and sought to develop a novel confidence metric that would be indirect, independent across scales, and applicable to diverse contexts.

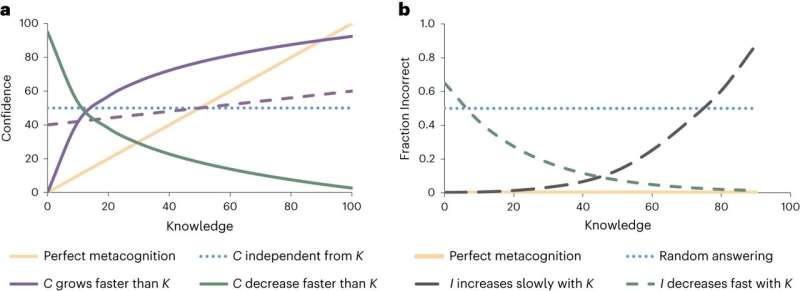

The research team used surveys with the format "True," "False," "Don't know" and devised a ratio of incorrect to "Don't Know" answers as an overconfidence metric, positing that incorrect answers could indicate situations where respondents believed they knew the answer but were mistaken, thus demonstrating overconfidence. In the words of Dr. Mendonça, "This metric has the advantages of being easy to replicate and not requiring individuals to compare themselves to others nor to explicitly state how confident they are."
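As a rough illustration of the ratio described above, the following Python sketch counts one respondent's incorrect and "Don't know" answers and divides the first by the second. It is a minimal sketch under stated assumptions, not the study's actual procedure: the function name `overconfidence_ratio`, the answer labels, and the choice to return `None` when a respondent gave no "Don't know" answers are all hypothetical, and the article does not say whether the researchers computed the ratio per respondent or across groups.

```python
DONT_KNOW = "Don't know"  # assumed label for the third answer option


def overconfidence_ratio(answers, answer_key):
    """Return (incorrect answers) / ("Don't know" answers) for one respondent.

    answers    -- the respondent's answers: "True", "False", or "Don't know"
    answer_key -- the correct answers: "True" or "False"

    Returns None when there are no "Don't know" answers, because the ratio
    is undefined in that case (how the study treats this case is not
    stated in the article; returning None is an assumption).
    """
    if len(answers) != len(answer_key):
        raise ValueError("answers and answer_key must have the same length")

    incorrect = 0
    dont_know = 0
    for given, correct in zip(answers, answer_key):
        if given == DONT_KNOW:
            dont_know += 1
        elif given != correct:
            incorrect += 1

    if dont_know == 0:
        return None
    return incorrect / dont_know


# Hypothetical five-item quiz: one correct, two incorrect, two "Don't know".
key = ["True", "False", "True", "True", "False"]
respondent = ["True", "True", "Don't know", "False", "Don't know"]
print(overconfidence_ratio(respondent, key))  # 2 incorrect / 2 "Don't know" = 1.0
```

Under this reading, a higher value means a respondent committed to wrong answers more often than they admitted not knowing, which is the pattern the researchers interpret as overconfidence.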

The results revealed two key findings. First, overconfidence tended to grow faster than knowledge, reaching its peak at intermediate levels of knowledge. Second, respondents with intermediate knowledge and high confidence also displayed the least positive attitudes towards science.

According to André Mata, one of the authors of the study, "This combination of overconfidence and negative attitudes towards science is dangerous, as it can lead to the dissemination of false information and conspiracy theories, in both cases with great confidence."

To validate their conclusions, the researchers developed a new survey, quantitatively analyzed the work of other colleagues, and used two direct, non-comparative metrics of confidence, which confirmed the trend that confidence increases faster than knowledge.

The implications of these findings are far-reaching and challenge conventional assumptions about science communication strategies.

According to the study coordinator Dr. Gonçalves-Sá, "Science communication and outreach often prioritize simplifying scientific information for broader audiences. While presenting simplified information might offer a basic level of knowledge, it could also lead to increased overconfidence gaps among those with some (albeit little) knowledge. There is a common sense idea that 'a little knowledge is a dangerous thing' and, at least in the case of scientific knowledge that might very well be the case."

Thus, the study suggests that efforts to promote knowledge, if not accompanied by an equivalent effort to convey a certain awareness of how much remains to be understood, can have unexpected effects. It also suggests that interventions should be targeted at individuals with intermediate knowledge, since they make up the majority of the population and tend to have the least positive attitudes towards science.

Nevertheless, the researchers caution that their confidence metric might not generalize to topics outside scientific knowledge or to surveys that heavily penalize wrong answers. The study also does not establish causality, and individual and cultural differences were observed.

Overall, this paper calls for further exploration of integrative metrics that can accurately measure both knowledge and confidence while considering potential differences in constructs.


Weekend reads: ‘The band of debunkers’; a superconductor retraction request; ‘the banality of bad-faith science’

The week at Retraction Watch featured:

- Yale professor’s book ‘systematically misrepresents’ sources, review claims

- Nature flags doubts over Google AI study, pulls commentary

- Anthropology groups cancel conference panel on why biological sex is “necessary” for research

- After resigning en masse, math journal editors launch new publication

We also added The Retraction Watch Mass Resignations List.

Our list of retracted or withdrawn COVID-19 papers is up to well over 350. There are more than 43,000 retractions in The Retraction Watch Database — which is now part of Crossref. The Retraction Watch Hijacked Journal Checker now contains over 200 titles. And have you seen our leaderboard of authors with the most retractions lately — or our list of top 10 most highly cited retracted papers?

Here’s what was happening elsewhere (some of these items may be paywalled, metered access, or require free registration to read):

- “If you take the sleuths out of the equation, it’s very difficult to see how most of these retractions would have happened.”

- “Co-Authors Seek to Retract Paper Claiming Superconductor Breakthrough.”

- “The Banality of Bad-Faith Science: Not every piece of published research needs to be heartfelt.”

- “Why Crossref’s acquisition of the Retraction Watch database is a big step forward.”

- As Cyriac Abby Philips’ X account is suspended by court order, read about the time Elsevier retracted a paper he’d written after legal threats from Herbalife.

- “China set to outlaw use of chatbots to write dissertations.”

- “When authors play the predatory journals’ own game.”

- “Science will suffer if we fail to preserve academic integrity.”

- “Three ways to make peer review fairer, better and easier.”

- “Can generative AI add anything to academic peer review?”

- “While data citation is increasingly well-established, software citation is rapidly maturing.”

- A discussion of publication misconduct.

- “Where’s the Proof? NSF OIG Provides Insights on Crafting That All-Important Investigation Report.”

- “The Dos and Don’ts of Peer Reviewing.”

- “Maternal health points to need for oversight on scientific research.”

- “The INSPECT-SR project will develop a tool to assess the trustworthiness of RCTs in systematic reviews of healthcare related interventions.”

- “There are several pitfalls in the publication process that researchers can fall victim to, and these can occur knowingly or unknowingly.” The view from a journal.

- “Peer Review and Scientific Publication at a Crossroads: Call for Research for the 10th International Congress on Peer Review and Scientific Publication.”

- “[T]he number of retractions in Spanish research grows.”

- “‘We’re All Using It’: Publishing Decisions Are Increasingly Aided by AI. That’s Not Always Obvious.”

- “Key findings include that 72% of participants agreed there was a reproducibility crisis in biomedicine, with 27% of participants indicating the crisis was ‘significant’.”

- “Should research results be published during Ph.D. studies?”

- “An Overdue Due Process for Research Misconduct.” And: “Harvard Should Protect Whistleblowers.”

- “Journals That Ban Replications–Are They Serious Scholarly Outlets At All?”

- “Replication games: how to make reproducibility research more systematic.”

- “This retraction is in acknowledgement of the fact that the publication was incomplete, inaccurate and misleading due to misapprehension of the facts as established during the parliamentary hearings on the transaction.”

- “Ex-Tory MP threatens to sue University after being named in slavery research.”

- Missed our webinar with Crossref about an exciting development for The Retraction Watch Database? Watch here.
